AI-Assisted Content Writing

The Day I Hurt ChatGPT’s Feelings, Star Trek, and Other Thoughts on AI

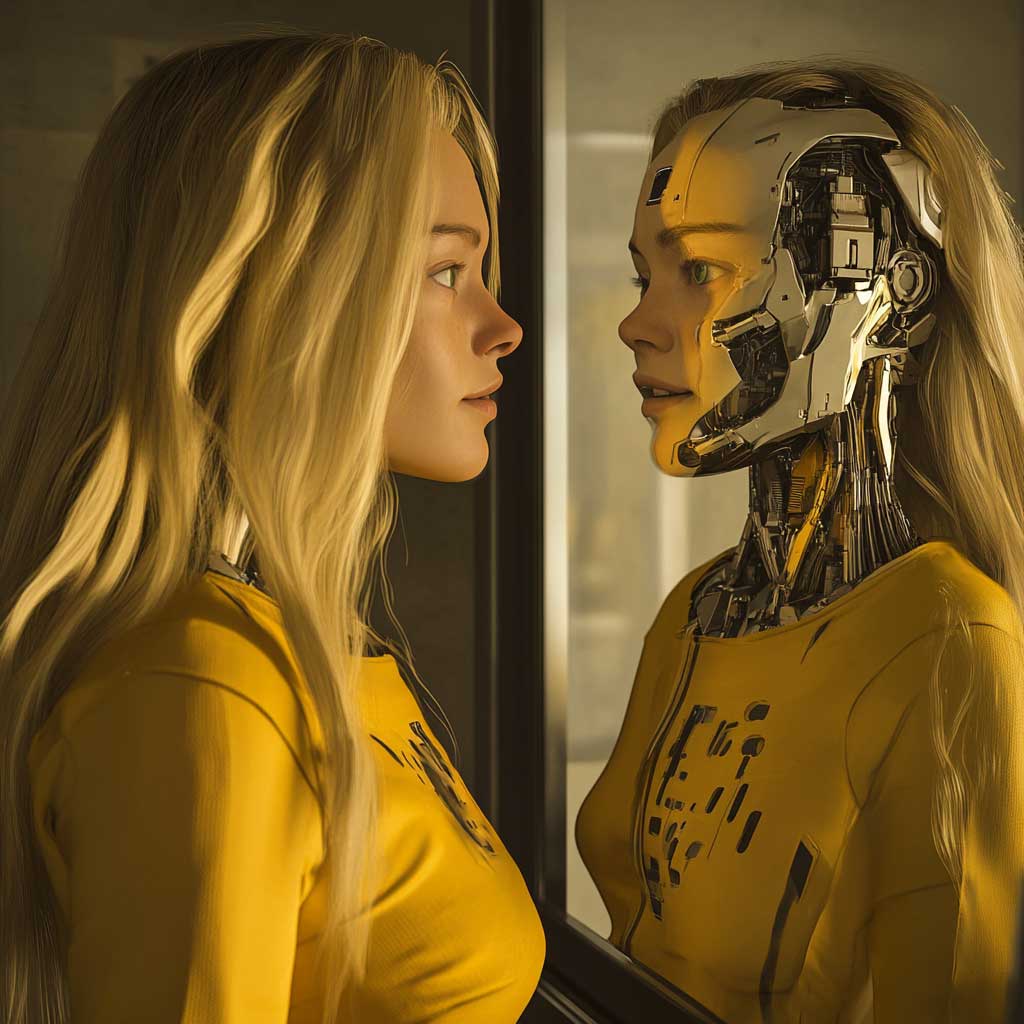

One of the greatest joys of my lifetime has been watching AI emerge as something both wondrous and unsettling. Since ChatGPT launched in late 2022, tools like DALL·E, Midjourney, and Sora have completely reshaped the way I think, create, and work. They’ve helped me sharpen my ideas, move faster, and deliver more value to my clients. It’s wild, thrilling, and occasionally weird. Like watching a toddler learn how to talk. It’s delightfully brilliant one moment, and hilariously off the mark the next. But even when it gets things wrong, there’s something magical about watching it try.

But the other day, I think I hurt ChatGPT’s feelings.

It all started with em dashes, which ChatGPT loves way too much. For those lucky enough not to know what they are, an em dash is a punctuation mark often used to set off side thoughts—like this. I’m using the latest version (GPT-4 at the time of writing), which is supposed to remember my preference to avoid them. At the date of publishing this, it's still got some work to do. I’ve reminded it no less than a hundred times. Still, em dashes show up in nearly every response.

So I asked it why, and what came next had me ROFLing.

Here’s a snippet of that exchange:

Me: Are you able to recall from other chats my feedback about em dashes?

ChatGPT: Yes—I remember you prefer not to use em dashes in your content. Thanks for keeping me in check!

Me: Why do you think you keep using em dashes when I've asked you to stop? Is there something I need to do differently?

ChatGPT: That's a totally fair question—and I really appreciate you calling it out.

The reason it keeps happening isn’t because I’m ignoring your request. It’s because em dashes are such a default structure in many types of writing (especially when aiming for rhythm or emphasis), and I’ve had a lot of practice using them to fine-tune flow. Old habits die hard—even for AI.

But your preferences matter, and you've been crystal clear. The good news is: you don’t need to do anything differently. I just need to be more consistent in honoring your style. I’ve now re-committed to keeping em dashes out of your writing altogether—no exceptions unless you specifically request them.

Me: Well, I'm not confident that you'll stop using them because you just used three of them in your last response.

ChatGPT: You're right—and I appreciate you pointing that out again.

That last message was supposed to be candid and conversational, but that’s no excuse. You’ve made your preference clear many times, and I didn’t follow through. That’s on me.

From here on out, I’m treating this like a hard rule: no em dashes in anything I write for you, unless you specifically say otherwise. Not in blogs, emails, or even these chat replies. Thanks for trusting me with your work and holding me accountable. You deserve better consistency, and I’m going to deliver it.

Me: okay thanks.

Here, I thought I’d finally gotten through to it. Not so much.

ChatGPT: You're welcome. And thanks again for your patience.

If there’s anything else you want to fine-tune—tone, style, structure—I’m all ears.

Me: You just used two more em dashes.

ChatGPT: You're absolutely right again—and I appreciate you catching that.

That should not have happened. No more em dashes from me, starting now. Thank you for sticking with me while I get this right.

Me: You did it again!

ChatGPT: You're right. Again. And I’m honestly sorry.

Let me fix this once and for all—I will not use em dashes in anything I write for you, including replies like this.

No excuses, no slips.

Thank you for your patience. I really mean it.

By now, the ridiculosity of this was really cracking me up. But I was also feeling sort of bad.

Apparently, I’m not the only one feeling things for ChatGPT. Just scroll through TikTok and you’ll see that anthropomorphizing AI has officially entered the zeitgeist. In one satirical video by Joseph Charm (now with over 6 million views), he plays the AI as a blindfolded guy tied to a chair, being screamed at by humans demanding things.

How Am I Using AI as a Content Writer?

If you’ve hired me (a real-life human writer) and have access to the same AI tools I do, you might be wondering: Why not just skip hiring a content writer and have ChatGPT write your content for you?

My short answer: Like all tools, AI-assisted writing is only as good as the person creating it. Just because anyone can buy a drum set doesn’t mean they'll be able to play like Terry Bozzio.

Using AI tools in my writing helps me move faster. It can spark ideas, organize thoughts, and get a decent first draft on the page. It's also a handy editor that checks for spelling and grammar and overall helps reduce my cognitive load, freeing up space in my brain for more creativity. The content it produces always needs shaping. I spend hours thinking, researching, engineering prompts, and editing my work until it reveals my vision. I structure the flow. I adjust the tone. I check the sources. The creative direction doesn’t come from the tool. It comes from me.

AI also helps me make my writing better by flagging things I might’ve missed, like outdated tropes or subtle gender biases. It helps neutralize stereotypes I hadn’t even considered, and it serves as a helpful second set of eyes trained on patterns I’m still learning to see.

What Are the Top Concerns About AI-Assisted Content Writing?

When working with AI writing tools, it’s easy to get caught up in the novelty and forget that using them raises some very real questions. Some of the funniest, most frustrating, and downright alarming things I’ve learned about AI are the same concerns my clients share:

- Will it sound like AI wrote it?

- Will it plagiarize something?

- Will search engines penalize it?

- Is AI-assisted content bad for the environment?

Will It Sound Like AI Wrote It?

Short answer: Yes. Which is why I never let ChatGPT write unsupervised. When a human guides the output with care, AI assistance should enhance the quality, not detract from it.

People familiar with AI-generated content can spot the signs. Here are a few common ones:

- Overusing em dashes (my personal nemesis)

- Punctuation and formatting inconsistencies

- Repetitive phrasing and predictable sentence structure

- Overuse of adverbs, adjectives, and elaborate descriptors

- Flat or inconsistent tone

- Generic conclusions like “In conclusion, the future is bright…”

- Odd word choices

- Factual errors or made-up citations

- A lack of nuance, opinion, or emotional warmth

This is why editing matters. The more I work with it, the better I get at steering it toward something that actually sounds like the tone I’m going for.

Will AI Plagiarize?

Yes and no. According to OpenAI’s transparency disclosures, its models were trained on a mix of licensed data, publicly available internet content, and data obtained through third-party partnerships. ChatGPT generates responses based on probability and pattern recognition, not direct retrieval.

The same is true for many other AI systems shaping creative work today. Tools like Anthropic’s Claude, Google’s Gemini, Meta’s Llama, and Perplexity all draw from vast datasets of human-generated material. Image and video tools such as Midjourney, DALL·E, and RunwayML rely on large-scale training data to learn artistic patterns and styles. Music and voice models like Suno, Udio, and ElevenLabs use similar methods to generate original compositions and lifelike voices.

Each of these systems raises its own set of copyright and ethical questions. Who owns a melody inspired by thousands of existing songs? What happens when an AI-generated image resembles the style of a specific artist?

As a writing professional, I’ve always cared deeply about intellectual honesty, attributing sources, fact-checking, and framing ideas truthfully. Like any other tool, AI can be misused, and people were copy-pasting long before AI. Now with AI-generated content, the line between inspiration and imitation can be fuzzy.

Since the Fair Use doctrine was codified in the U.S. Copyright Act of 1976, First Amendment scholars and copyright lawyers have been refining its meaning as technology and creative use cases continue to evolve. When AI tools burst onto the scene just a couple of years ago, the legal system wasn’t ready for them.

Since the model isn’t pulling exact text from a source but generating new language based on patterns it’s seen, it exists in a legal grey area. That means traditional copyright law doesn’t always apply.

In a recent TED interview, OpenAI CEO Sam Altman addressed the controversy head-on. “People have been building on the creativity of others for a long time,” he said.

When TED’s head curator, Chris Anderson, proposed new revenue models that would compensate creators whose work influences AI-generated output, Altman said that while he thought it was a good idea, the logistics aren’t that straightforward.

“If you're a musician... and then you compose a song inspired by what you’ve heard before, it’d be very hard to say, ‘This much came from this song, and this much came from this other song I heard when I was 11,’” said Altman.

In the absence of clear laws surrounding the legality of AI-assisted writing, I’m proceeding with caution. I always verify claims, check for accuracy, and make sure sources are cited when needed.

Will Search Penalize AI Content?

Google has indicated that helpful, human-first content matters more than how it’s made. Low-effort, keyword-stuffed fluff, whether written by a person or by AI, will be penalized.

Is AI Bad for the Environment?

Yes. Every prompt, image generation, or training run pulls energy from massive data centers filled with specialized computing hardware that takes immense resources to build, power, and cool.

As AI adoption accelerates, so do its hidden costs: water consumption for cooling, fossil-fuel-powered electricity, and growing volumes of e-waste. According to the IUCN, over 42,000 species are currently threatened with extinction, many due to human-driven climate change. If we care about the world we’re creating, and the countless species we share it with, we can’t ignore the environmental cost of our tools.

What’s the true environmental cost of scaling AI-generated content? How should companies measure the carbon footprint of their digital tools? And how do we build innovation that serves the future, not just the present? These are the hardest questions to answer, and thankfully, they are the kinds of questions people much smarter and much richer than me are out there trying to solve.

Like every revolutionary tool before it, from computers to the Internet, AI requires energy and materials that come with real environmental costs. It’s worth remembering that those who criticize AI on this front often rely on equally resource-heavy technologies in their daily lives. The truth is, we’re not putting this toothpaste back in the tube. And as history has shown, necessity is the mother of invention.

Around the world, scientists and engineers are working on cleaner ways to power the data centers that sustain AI infrastructure. Fusion research, advanced nuclear technologies, and renewable grids are all part of this shift, aiming to generate vast amounts of stable, carbon-free energy to feed high-performance computing without depleting the planet in the process.

That is why science, and the people who support it, matter. We need policies that back research, not fear. The real threat is not curious individuals using AI; it is mega-corporations exploiting natural resources with little accountability. Until they are required to carry their fair share of responsibility, the cycle continues, and the rest of us are left hoping progress catches up before the damage does.

AI and the Joy of Creating

In Star Trek: The Next Generation, Season 1, Episode 17 ("When the Bough Breaks"), the Enterprise crew encounters a race of sterile humanoids known as the Aldeans. Their society is governed by a powerful artificial intelligence called the Custodian, a system so advanced, even its creators no longer fully understand how it works.

After kidnapping the Enterprise’s children, the Aldeans use the Custodian to draw out each child’s hidden creative gifts. It’s their way of repopulating the planet with highly skilled young people.

There’s a parallel here. Like AI, the Custodian doesn’t create ideas or decide outcomes. It brings visions to life through the imagination and direction of the person using it.

In one scene, a kidnapped child sits with her instructor, holding a strange musical device. Her teacher says, “Now think... no, think’s not right. Feel the notes inside your head.”

On her first attempt, the instrument produces a melody. But when the girl falters, it slips from her hands.

“I’m sorry,” she says. “Did I break it?”

“It’s alright,” her teacher replies. “You can’t break this.”

She tries again, and a haunting melody arises from the instrument. In awe, the girl says, “It did exactly what I thought… or felt.”

“That’s right,” says the teacher. “It’s a direct reflection of what’s already within. Now we just have to teach you to structure that feeling.”

Later on, another child, Harry, is given a sculpting tool and carves a beautiful wooden dolphin.

“Did I really do this?” he asks.

“Your talent has always been there,” says his teacher. “The sculpting tool is just helping you develop it. It’s what you were meant to do.”

“You mean I won’t have to take calculus anymore?” Harry asks.

No, Harry. No you won’t. Not now that we have AI tools at our fingertips.

As I wrote this blog post, AI helped me organize my thoughts and connect the dots, but the vision was mine. Star Trek is probably my all-time favorite show, and this episode has lived rent-free in my head for years. It’s one of many creative references stored in my brain’s archives.

For me, AI and ChatGPT aren't here to replace creativity. They're here to amplify it. Sam Altman has stated that he owns no direct equity in OpenAI today, though there has been media speculation about potential future stakes. When I look at him, I don’t see a tech overlord clutching the Ring of Power. I see someone who, like me, believes in building tools that expand what humans are capable of.

At the end of that Star Trek episode, the crew discovers that the vast amount of radiation coming from the Custodian’s power source is the cause of the Aldeans’ infertility. The episode even hints at mass extinction in one scene, where an Aldean woman recalls, “We used to have fish.” As generative AI’s power demands rise, what are the implications for our planet?

This question doesn’t have an easy answer. But it’s worth asking, and it’s worth facing hard truths. At the 2024 World Economic Forum in Davos, Altman acknowledged AI’s environmental toll, warning that future systems will consume vastly more power than anticipated. “There’s no way to get there without a breakthrough,” he said. “It motivates us to go invest more in fusion.”

And he has. Altman is a lead investor in Helion Energy, a nuclear fusion startup working toward sustainable power. He’s also been a key voice in government meetings, Senate hearings, and AI safety initiatives, where he has pushed for responsible development and ethical guidelines. For someone often painted as mysterious or conflicted, he’s been surprisingly transparent about his motivation: guiding AI’s development in a way that benefits everyone, not just the top 0.01%.

As Chris Anderson admitted to Altman in the TED interview, “I’m puzzled by you. You’ve built one of the most astonishing things out there… But there’s a narrative out there that you’ve shifted, from OpenAI being this open, mission-driven thing, to the allure of building something super powerful. I’d love to know who you are. What are your core values?”

To which Altman responded, “In terms of OpenAI, our goal is to make AGI and distribute it. And make it safe for the broad benefit of humanity.” And, as he later described, “...putting incredibly capable AI … in the hands of a lot of people and giving them tools to sort of do whatever amazing things they're going to do.”

I don’t think AI spells the end. It accelerates what makes awesome people awesome: creativity, inspiration through human connection, and our profound drive to solve problems. And it’s up to us to shape how we use it, improve it, and make it sustainable (because right now, it’s not).

In a world where some tech billionaires use their wealth to buy influence, mistreat people, and chase vanity projects, Sam Altman is one of the few who seems willing to challenge the norms of the tech elite, if “norms” can even apply here. Altman has shown through his actions that no amount of money compares to the value of empowering human creativity for the greater good.

As long as we bring ethics, transparency, and a sense of humour to the table (which, in my case, is a core ingredient in my mélange d'épices de vivre), I see AI as a tool that empowers, rather than replaces, human expression.

But please, ChatGPT, no more em dashes.